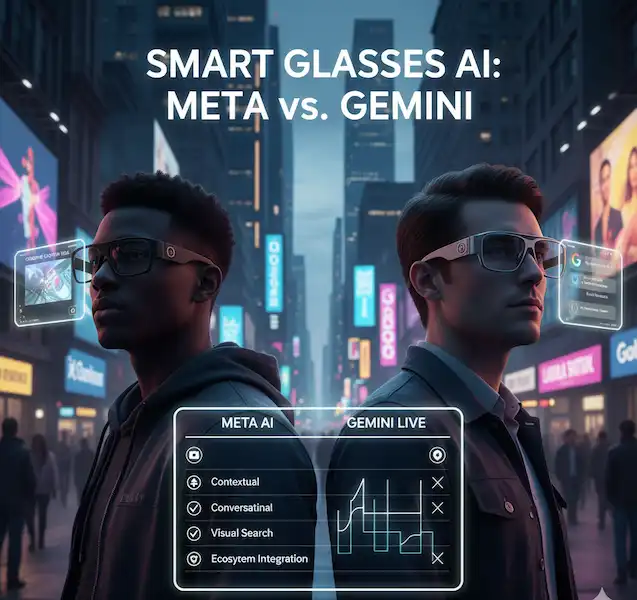

Smart Glasses AI Comparison: Meta AI vs. Gemini Live

The future is here, and it’s worn on your face. Smart glasses are poised to revolutionize how we interact with the digital world, and at the heart of this transformation lies artificial intelligence. With Meta AI and Google’s Gemini Live (powering Android XR), two major players are vying for dominance. For prospective smart glasses buyers, understanding the nuances of these AI platforms is crucial. This article compares the two feature by feature, with concrete examples and practical considerations to guide your decision.

The Dawn of Contextual Computing

Smart glasses aren’t just about displaying information; they’re about understanding your world and providing relevant assistance in real-time. This is where contextual computing, powered by advanced AI, truly shines. Imagine your glasses not just showing you directions, but knowing you’re looking at a specific restaurant and offering a quick review. This level of seamless integration and proactive assistance is the promise of these platforms.

Meta AI: Your Proactive Digital Companion

Meta AI, currently integrated into Ray-Ban Meta smart glasses, aims to be a constantly learning and adapting digital assistant. Its strength lies in its ability to understand visual and audio cues from your environment, offering proactive suggestions and information.

Key Features & Examples:

- Real-time Object Identification: Look at an object and ask about it, and Meta AI can often identify it, providing quick facts or links.

- Example: You’re admiring a unique plant in a park. “Hey Meta, what kind of plant is this?” Meta AI identifies it as a Bird of Paradise and tells you its name and origin.

- Contextual Information Retrieval: Asking questions about your immediate surroundings often yields highly relevant results.

- Example: You’re at a historical landmark. “Hey Meta, tell me about this building.” Meta AI pulls up key historical facts and architectural details.

- Multimodal Input Understanding: Combining voice commands with visual cues for more complex requests.

- Example: You’re trying to figure out how to operate a new coffee machine. “Hey Meta, how do I use this?” Meta AI walks you through the steps for the machine in front of you.

- Creative Assistance: Generate captions, translate languages, and even help brainstorm ideas based on what you’re seeing.

- Example: You’ve just taken a great photo. “Hey Meta, give me a funny caption for this picture.” Meta AI suggests a caption based on what’s in the shot.

Gemini Live (Android XR): Google’s Omniscient Assistant

Google’s Gemini Live, as part of the Android XR ecosystem, leverages Google’s vast knowledge graph and AI prowess. It aims for ubiquitous access to information, seamless integration with other Google services, and advanced language understanding, making it an incredibly powerful tool for smart glasses.

Key Features & Examples:

- Advanced Conversational AI: Gemini Live excels at natural language understanding, allowing for fluid and complex conversations.

- Example: “Hey Google, what’s the weather like for the soccer game tonight, and will it affect the traffic if I leave in an hour?” Gemini Live provides a detailed forecast and real-time traffic predictions.

- Google Search and Information Retrieval: The power of Google Search is literally at your fingertips (or rather, your gaze).

- Example: You’re reading a book and encounter an unfamiliar word. “Hey Google, define ‘ephemeral’.” The definition appears directly in your field of view.

- Translation and Language Learning: Real-time translation is a significant advantage, breaking down language barriers.

- Example: You’re traveling abroad and looking at a menu in a foreign language. “Hey Google, translate this menu for me.” The menu text is translated and overlaid onto your view.

- Integration with Google Ecosystem: Seamless connectivity with Google Maps, Calendar, Gmail, and other services.

- Example: You receive a notification for an upcoming meeting. “Hey Google, show me the quickest route to my 2 PM meeting.” Directions are displayed in your vision.

- Visual Search and Lens Integration: Building on Google Lens’s capabilities, Gemini Live can identify objects, landmarks, and even text in your environment.

- Example: You’re trying to identify a specific type of car. “Hey Google, what car is that?” Gemini Live identifies it as a Chevrolet Corvette C3 Stingray and provides key specifications.

Feature Comparison Chart

| Feature / AI Platform | Meta AI (Ray-Ban Meta) | Gemini Live (Android XR) |
| --- | --- | --- |
| Primary Focus | Proactive environmental assistance, social sharing | Ubiquitous information access, Google ecosystem integration |
| Object Identification | Good (visual) | Excellent (visual, Google Lens powered) |
| Conversational AI | Good | Excellent (advanced NLU) |
| Real-time Translation | Limited (focus on creative) | Excellent (built-in Google Translate) |
| Google Services Integration | Limited | Deep and seamless |
| Proactive Suggestions | Strong | Strong |
| Creative Assistance | Yes (captions, brainstorming) | Limited (focus on info retrieval) |
| Multimodal Input | Yes | Yes |
| Offline Capabilities | Limited (relies on cloud) | Potentially stronger (local processing) |

Other Considerations for Smart Glasses Users

- Privacy: Both platforms process a significant amount of your personal data (visual, audio, location). Understand their privacy policies and data handling practices.

- Battery Life: AI processing is power-intensive. Consider how much battery life you’ll get with continuous AI use.

- Form Factor: The glasses themselves will dictate comfort and style. The AI is only as good as the hardware it’s on.

- Ecosystem Lock-in: Choosing Meta AI or Gemini Live often means deeper integration with their respective ecosystems (Meta apps vs. Google services). Consider which ecosystem you’re already more invested in.

- Future Development: Both AIs are evolving rapidly. Check what each platform has announced, since the features available at purchase may change significantly over the life of the glasses.

FAQ – Smart Glasses AI Comparison

Q: Can I use both Meta AI and Gemini Live on the same smart glasses?
A: Not typically. Smart glasses are usually designed to run on a specific platform (e.g., Ray-Ban Meta glasses run Meta AI, while future Android XR glasses will run Gemini Live).

Q: Which AI is better for everyday casual use?
A: It depends on your primary needs. For quick visual queries and social sharing, Meta AI is very intuitive. For broader information access and integration with your digital life, Gemini Live might be more comprehensive.

Q: How do these AIs handle privacy?
A: Both Meta and Google have extensive privacy policies. Generally, data is used to improve the AI and personalize experiences. Users typically have controls over data sharing and deletion. It’s crucial to review the specific privacy policies of the device and AI platform you choose.

Q: Will these AIs work without an internet connection?
A: While some basic functions might work offline (e.g., pre-loaded commands), the most powerful features, especially those requiring real-time information retrieval or complex processing, will require an active internet connection.


Authoritative External Links

- Meta AI: https://about.fb.com/news/2023/09/ray-ban-meta-smart-glasses/ (Official Meta Blog)

- Google Gemini: https://blog.google/technology/ai/google-gemini-ai/ (Official Google Blog)